Algorithmic Bias and Discrimination: The Role of Law in Ensuring Fairness in Automated Decision-Making

Introduction: When Algorithms Become Judges

Algorithms silently shape our everyday lives, from curating our social media feeds to deciding who qualifies for a loan or even who gets hired. These automated decision-makers promise efficiency and objectivity, but beneath their seemingly neutral facade lies a troubling truth: algorithms can be biased, perpetuating and even exacerbating discrimination. This is where law plays a critical role: mitigating these biases and ensuring fairness.

Understanding Algorithmic Bias

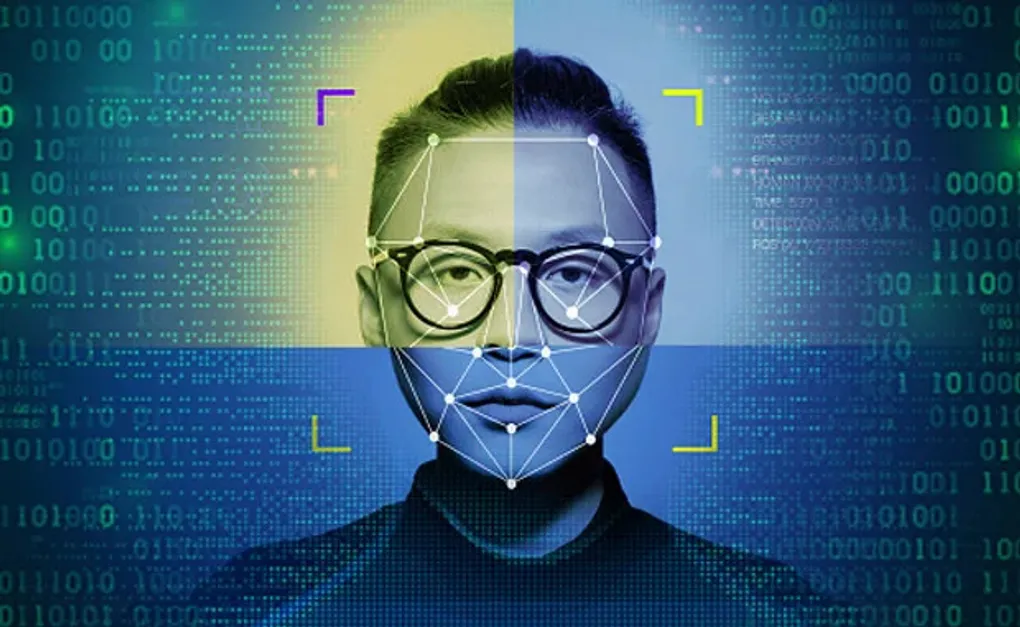

Algorithmic bias occurs when automated systems produce systematically prejudiced results because of flawed data, biased design choices, or unintentional human error. Examples abound: facial recognition technologies that identify people of color less accurately, or hiring algorithms that disproportionately exclude qualified female applicants.

Real-World Case Studies: Bias in Action

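One notorious example was Amazon’s experimental recruitment AI, which inadvertently learned biases from historical résumé data dominated by male applicants and came to favor men for technical roles; Amazon later abandoned the tool. Another was the COMPAS system in the U.S., designed to predict criminal recidivism: a 2016 ProPublica investigation found that Black defendants who did not reoffend were nearly twice as likely as white defendants to be misclassified as higher risk.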

Why Do Algorithms Become Biased?

Algorithmic bias typically emerges from:

- Biased Training Data: Algorithms reflect historical inequalities embedded in data.

- Algorithm Design Flaws: Developers may inadvertently introduce biases based on their implicit assumptions.

- Feedback Loops: When an algorithm’s decisions shape the data it later learns from, existing biases can be reinforced and amplified over time.
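The feedback-loop mechanism can be illustrated with a deliberately simplified toy simulation, loosely inspired by discussions of predictive policing. Everything in it is a hypothetical assumption: two districts (`A` and `B`) with the same true incident rate, incidents that are only recorded where patrols go, and a rule that sends most patrols to whichever district has the most recorded incidents so far. It is a sketch of the dynamic, not a model of any real system.

```python
"""Toy illustration of a feedback loop (all numbers are hypothetical).

Two districts have the SAME true incident rate, but incidents are only
recorded where patrols are sent, and most patrols go to whichever
district has the most recorded incidents so far.
"""

TRUE_INCIDENTS_PER_10_PATROLS = 1  # identical in both districts (assumption)
TOTAL_PATROLS = 100                # patrols available each round (assumption)
HOTSPOT_PATROLS = 80               # patrols sent to the current "hot spot" (assumption)


def simulate(recorded: dict[str, int], rounds: int) -> list[dict[str, int]]:
    """Return cumulative recorded incidents per district after each round."""
    recorded = dict(recorded)  # copy so the caller's data is not mutated
    history = []
    for _ in range(rounds):
        # The district with more *recorded* incidents is treated as the hot spot.
        hotspot = max(recorded, key=recorded.get)
        for district in recorded:
            if district == hotspot:
                patrols = HOTSPOT_PATROLS
            else:
                patrols = TOTAL_PATROLS - HOTSPOT_PATROLS
            # Incidents are only observed where patrols actually go.
            recorded[district] += patrols * TRUE_INCIDENTS_PER_10_PATROLS // 10
        history.append(dict(recorded))
    return history


if __name__ == "__main__":
    # A small historical skew: district A starts with slightly more records.
    start = {"A": 12, "B": 10}
    for i, snapshot in enumerate(simulate(start, rounds=5), start=1):
        share_a = snapshot["A"] / sum(snapshot.values())
        print(f"round {i}: {snapshot}  share recorded in A = {share_a:.2f}")
```

Although both districts behave identically, district A's share of recorded incidents rises from about 0.55 at the start to 0.72 after five rounds (and toward 0.80 if the loop continues), so the data appears to confirm the small initial skew that produced it.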

Legal Frameworks for Fairness: Current State of Play

Like many other jurisdictions, Australia currently addresses algorithmic bias only indirectly, through a patchwork of anti-discrimination and privacy legislation:

- Racial Discrimination Act 1975: Prohibits discriminatory practices based on race.

- Sex Discrimination Act 1984: Protects individuals from gender-based discrimination.

- Privacy Act 1988: Regulates how personal information is collected, used, and disclosed.

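Yet these laws were not designed with algorithms in mind, leaving significant gaps. For example, a person affected by an automated decision may not even know that an algorithm was involved, let alone be able to show that it treated them less favorably.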

International Approaches: Lessons from Abroad

Other jurisdictions are taking more direct approaches to tackling algorithmic bias:

- EU AI Act: Imposes stringent requirements on high-risk AI systems, including risk management, data governance measures that examine training data for possible biases, transparency, human oversight, and post-market monitoring.

- United States: Proposed federal legislation, notably the Algorithmic Accountability Act, would require companies to assess the impacts of automated decision systems, including potential bias, in sensitive sectors like hiring, lending, and healthcare.

Australia could benefit from adopting similarly proactive and explicit regulatory approaches.

Strategies for Legal Reform in Australia

To address algorithmic bias effectively, Australia could:

- Mandate Transparency and Accountability: Require algorithm audits and impact assessments for high-risk AI systems.

- Enforce Clear Standards for Fairness: Develop specific legislative criteria defining fairness and non-discrimination in automated decisions.

- Encourage Diverse and Inclusive Data Sets: Legally require AI developers to demonstrate efforts to mitigate bias through diverse training data.
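To give a sense of what an algorithm audit might actually measure, and why a legislated definition of fairness matters, here is a minimal Python sketch that computes two common group-fairness metrics on hypothetical loan decisions: each group's selection rate, and each group's approval rate among applicants who were genuinely qualified (a measure often called equal opportunity). The impact ratio compares the lowest and highest group rates against 0.8, borrowing the "four-fifths" rule of thumb from US employment guidelines purely for illustration. The records, group names, function names, and threshold are all assumptions, not an Australian legal test.

```python
"""Sketch of two group-fairness checks an algorithm audit might run.

All data below is hypothetical. Each record is
(group, actually_qualified, approved_by_the_algorithm).
"""
from collections import defaultdict

Record = tuple[str, bool, bool]

# Illustrative threshold borrowed from the US "four-fifths" rule of thumb.
FOUR_FIFTHS = 0.8

# Hypothetical decisions: both groups have 10 applicants and 4 approvals,
# but group Y has twice as many qualified applicants as group X.
RECORDS: list[Record] = (
    [("X", True, True)] * 4
    + [("X", False, False)] * 6
    + [("Y", True, True)] * 4
    + [("Y", True, False)] * 4
    + [("Y", False, False)] * 2
)


def selection_rates(records: list[Record]) -> dict[str, float]:
    """Share of each group that received a favorable decision."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, _qualified, was_approved in records:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {g: approved[g] / totals[g] for g in totals}


def true_positive_rates(records: list[Record]) -> dict[str, float]:
    """Approval rate among applicants who were actually qualified."""
    qualified: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, is_qualified, was_approved in records:
        if is_qualified:
            qualified[group] += 1
            approved[group] += int(was_approved)
    return {g: approved[g] / qualified[g] for g in qualified}


def impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())


def flags_adverse_impact(rates: dict[str, float], threshold: float = FOUR_FIFTHS) -> bool:
    """True if the impact ratio falls below the chosen threshold."""
    return impact_ratio(rates) < threshold


if __name__ == "__main__":
    sel = selection_rates(RECORDS)
    tpr = true_positive_rates(RECORDS)
    print("selection rates:", sel, "flagged:", flags_adverse_impact(sel))
    print("approval rate among qualified:", tpr, "flagged:", flags_adverse_impact(tpr))
```

On this toy data both groups are approved at the same rate (0.4 each, an impact ratio of 1.0), so a selection-rate check would pass; yet qualified applicants in group Y are approved only half as often as those in group X (0.5 versus 1.0). Which of these measures an audit should be required to use is exactly the kind of question a clear legislative fairness standard would need to settle.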

The Ethical and Social Dimensions

Beyond legislation, it’s crucial to foster a broader cultural shift towards ethical AI development, including public education and ethical training for AI professionals. Alongside the law, ethical AI frameworks can help guide developers towards equitable and responsible automated decision-making.

Conclusion: Crafting a Fair Algorithmic Future

Algorithmic bias isn’t merely a technical glitch—it’s a profound societal issue demanding urgent attention. The law plays a pivotal role in safeguarding fairness and protecting against digital discrimination. By crafting targeted legal interventions and fostering ethical practices, Australia can ensure that algorithms serve society fairly, transparently, and equitably, shaping a more inclusive digital future.
